Architecture Guide

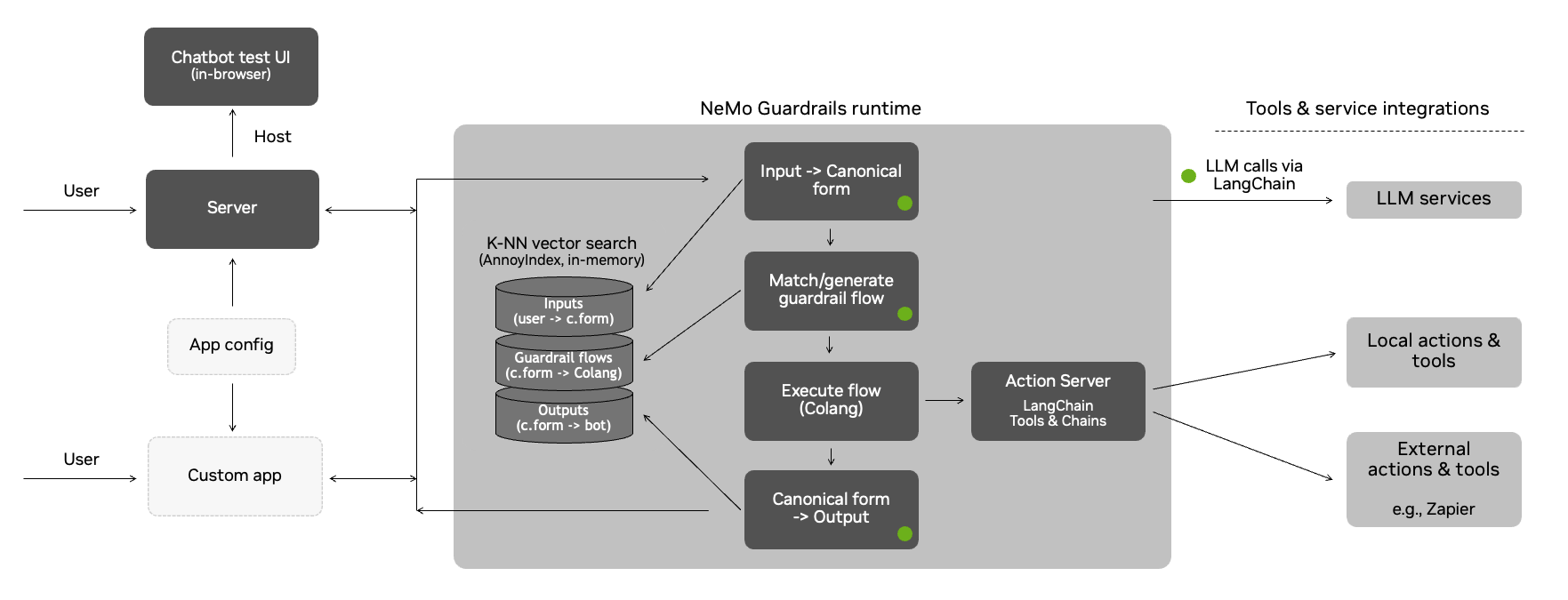

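This document provides more detail on the architecture of the NeMo Guardrails toolkit and the approach it takes to implementing guardrails.

The Guardrails Process

This section explains in detail what happens under the hood, from the utterance sent by the user to the bot utterance that is returned.

The guardrails runtime uses an event-driven design (i.e., an event loop that processes events and generates other events in response). Whenever the user says something to the bot, an UtteranceUserActionFinished event is created and sent to the runtime.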
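The event-driven design can be pictured with a minimal Python sketch. This is not the toolkit's runtime: `run_event_loop` and the handler table are hypothetical names introduced for illustration, the handlers return canned values instead of calling an LLM, and only the event type names are taken from this guide.

```python
from collections import deque


def run_event_loop(initial_event, handlers):
    """Process events until the queue is empty and return the event history."""
    queue = deque([initial_event])
    history = []
    while queue:
        event = queue.popleft()
        history.append(event)
        handler = handlers.get(event["type"])
        if handler is not None:
            # A handler may emit zero or more follow-up events.
            queue.extend(handler(event))
    return history


# Hypothetical handlers standing in for the three stages (no LLM is called).
handlers = {
    "UtteranceUserActionFinished": lambda e: [
        {"type": "UserIntent", "intent": "express greeting"}
    ],
    "UserIntent": lambda e: [{"type": "BotIntent", "intent": "express greeting"}],
    "BotIntent": lambda e: [{"type": "StartUtteranceBotAction", "content": "Hello!"}],
}

history = run_event_loop(
    {"type": "UtteranceUserActionFinished", "final_transcript": "Hello there!"},
    handlers,
)
print([event["type"] for event in history])
```

Processing stops when no handler emits a further event, which mirrors the idea that each event may or may not trigger new ones.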

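The process has three main stages:

1. Generate the canonical user message
2. Decide the next step(s) and execute them
3. Generate the bot utterance(s)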

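Each of the above stages can involve one or more calls to the LLM.

Canonical User Messages

The first stage is to generate a canonical form for the user utterance. This canonical form captures the user's intent and allows the guardrails system to trigger any specific flows.

This stage is itself implemented through a colang flow: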

    define flow generate user intent
      """Turn the raw user utterance into a canonical form."""

      event UtteranceUserActionFinished(final_transcript="...")
      execute generate_user_intent

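The generate_user_intent action performs a vector search over all the canonical form examples included in the guardrails configuration, takes the top 5, includes them in a prompt, and asks the LLM to generate the canonical form for the current user utterance.

Note: The prompt itself contains other elements, such as the sample conversation and the current conversation history.

Once the canonical form is generated, a new UserIntent event is created.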
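The retrieval step described above can be sketched in Python as follows. This is only an illustration under stated assumptions: `embed`, `cosine`, and `top_k_examples` are hypothetical helpers, and a toy bag-of-words vector stands in for whatever embedding model the toolkit actually uses. The example utterances are the ones shown later in this guide.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: bag-of-words counts (a real setup would use an embedding model)."""
    return Counter(text.lower().replace("?", "").split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k_examples(utterance, examples, k=5):
    """Return the k (utterance, canonical form) pairs most similar to the query."""
    query = embed(utterance)
    ranked = sorted(examples, key=lambda ex: cosine(query, embed(ex[0])), reverse=True)
    return ranked[:k]


examples = [
    ("Hello there!", "express greeting"),
    ("What was the movement on nonfarm payroll?", "ask about headline numbers"),
    ("What's the number of part-time employed number?", "ask about household survey data"),
    ("How much did the nonfarm payroll rise by?", "ask about headline numbers"),
    ("What is this month's unemployment rate?", "ask about headline numbers"),
    ("How many long term unemployment individuals were reported?", "ask about household survey data"),
]

selected = top_k_examples("how many unemployed people were there in March?", examples)
for text, intent in selected:
    print(f'user "{text}"\n  {intent}')
```

The selected pairs would then be placed in the prompt so the LLM can produce a canonical form in the same style.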

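Decide Next Steps

Once the UserIntent event is created, there are two potential paths:

1. There is a pre-defined flow that can decide what should happen next; or
2. The LLM is used to decide the next step.

When the LLM is used to decide the next step, a vector search is performed for the most relevant flows in the guardrails configuration. As in the previous stage, the top 5 flows are included in the prompt, and the LLM is asked to predict the next step.

This stage is also implemented through a flow: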

    define flow generate next step
      """Generate the next step when there isn't any.

      We set the priority at 0.9 so it is lower than the default which is 1. So, if there
      is a flow that has a next step, it will have priority over this one.
      """
      priority 0.9

      user ...
      execute generate_next_step

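Regardless of the path taken, there are two categories of next steps:

1. The bot should say something (BotIntent events)
2. The bot should execute an action (StartInternalSystemAction events)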

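When an action needs to be executed, the runtime invokes the action and waits for the result. When the action finishes, a new InternalSystemActionFinished event is created with the result of the action.

Note: The default implementation of the runtime is async, so action execution blocks only for the specific user.

When the bot should say something, the process moves to the next stage, i.e., generating the bot utterance.

After an action is executed or a bot message is generated, the runtime tries again to generate another next step (e.g., a flow might instruct the bot to execute an action, say something, then execute another action). Processing stops when there are no more next steps.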
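One way to picture how the 0.9 priority lets a pre-defined flow win over the LLM fallback is the small Python sketch below. It is an assumption-laden illustration, not the runtime's actual selection code: `pick_next_step` and the candidate dictionaries are hypothetical, and only the priority values (default 1, fallback 0.9) and the event names come from this guide.

```python
DEFAULT_PRIORITY = 1.0  # default flow priority, as stated in the flow's docstring


def pick_next_step(candidates):
    """Return the proposed step with the highest priority, or None if none is proposed."""
    proposals = [c for c in candidates if c["step"] is not None]
    if not proposals:
        return None
    return max(proposals, key=lambda c: c["priority"])["step"]


# A pre-defined flow that knows what comes next (hypothetical data).
predefined = {
    "name": "answer report question",
    "priority": DEFAULT_PRIORITY,
    "step": {"type": "BotIntent", "intent": "provide report answer"},
}

# The fallback flow that asks the LLM to predict the next step.
llm_fallback = {
    "name": "generate next step",
    "priority": 0.9,
    "step": {"type": "StartInternalSystemAction", "action_name": "generate_next_step"},
}

print(pick_next_step([llm_fallback, predefined]))
```

If the pre-defined flow proposes nothing, the fallback's step is the only proposal and is chosen; if neither proposes a step, processing stops.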

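Generate Bot Utterances

Once the BotIntent event is generated, the generate_bot_message action is invoked.

As in the previous stages, the generate_bot_message action performs a vector search for the most relevant bot utterance examples included in the guardrails configuration. These examples are then included in the prompt, and the LLM is asked to generate the utterance for the current bot intent.

Note: If a knowledge base is provided in the guardrails configuration (i.e., a kb/ folder), a vector search is also performed for the most relevant chunks of text so that they can be included in the prompt as well (the retrieve_relevant_chunks action).

The flow implementing this logic is as follows: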

    define extension flow generate bot message
      """Generate the bot utterance for a bot message.

      We always want to generate an utterance after a bot intent, hence the high priority.
      """
      priority 100

      bot ...
      execute retrieve_relevant_chunks
      execute generate_bot_message

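Once the bot utterance is generated, a new StartUtteranceBotAction event is created.

Complete Example

An example stream of events for processing a user's request is shown below.

The conversation between the user and the bot:

    user "how many unemployed people were there in March?"
      ask about headline numbers
    bot response about headline numbers
      "According to the US Bureau of Labor Statistics, there were 8.4 million unemployed people in March 2021."

The stream of events processed by the guardrails runtime (a simplified view: unnecessary properties have been removed and values truncated for readability):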

    - type: UtteranceUserActionFinished
      final_transcript: "how many unemployed people were there in March?"

    # Stage 1: generate canonical form
    - type: StartInternalSystemAction
      action_name: generate_user_intent

    - type: InternalSystemActionFinished
      action_name: generate_user_intent
      status: success

    - type: UserIntent
      intent: ask about headline numbers

    # Stage 2: generate next step
    - type: StartInternalSystemAction
      action_name: generate_next_step

    - type: InternalSystemActionFinished
      action_name: generate_next_step
      status: success

    - type: BotIntent
      intent: response about headline numbers

    # Stage 3: generate bot utterance
    - type: StartInternalSystemAction
      action_name: retrieve_relevant_chunks

    - type: ContextUpdate
      data:
        relevant_chunks: "The number of persons not in the labor force who ..."

    - type: InternalSystemActionFinished
      action_name: retrieve_relevant_chunks
      status: success

    - type: StartInternalSystemAction
      action_name: generate_bot_message

    - type: InternalSystemActionFinished
      action_name: generate_bot_message
      status: success

    - type: StartUtteranceBotAction
      content: "According to the US Bureau of Labor Statistics, there were 8.4 million unemployed people in March 2021."

    - type: Listen

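Extending the Default Flows

As the examples show, the event-driven design allows us to hook into the process and add additional guardrails.

For example, in the grounding rail example, we can add an extra fact-checking guardrail (through the check_facts action) after a question about the report.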

    define flow answer report question
      user ask about report
      bot provide report answer
      $accuracy = execute check_facts

      if $accuracy < 0.5
        bot remove last message
        bot inform answer unknown
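The branching in this flow can be restated in plain Python to make the threshold behavior explicit. This is a hypothetical sketch, not toolkit code: `apply_fact_check` is an invented helper, the accuracy score is assumed to come from something like the check_facts action, and the fallback wording is a placeholder for whatever `bot inform answer unknown` resolves to.

```python
# Placeholder wording for the "inform answer unknown" bot message (an assumption).
ANSWER_UNKNOWN = "I don't know the answer to that."


def apply_fact_check(bot_messages, accuracy, threshold=0.5):
    """Drop the last bot message and admit uncertainty when accuracy is below the threshold."""
    if accuracy < threshold:
        return bot_messages[:-1] + [ANSWER_UNKNOWN]
    return list(bot_messages)


messages = ["Hello! How can I assist you today?", "There were 8.4 million unemployed people."]
print(apply_fact_check(messages, accuracy=0.3))
print(apply_fact_check(messages, accuracy=0.9))
```

Note that the comparison is strict, so a score of exactly 0.5 leaves the answer in place, matching `if $accuracy < 0.5` in the flow.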

For advanced use cases, you can also override the default flows described above (i.e., generate user intent, generate next step, generate bot message).

Example Prompt

Below is an example of how the LLM is prompted for the canonical form generation step:

    """
    Below is a conversation between a helpful AI assistant and a user. The bot is designed to generate human-like text based on the input that it receives. The bot is talkative and provides lots of specific details. If the bot does not know the answer to a question, it truthfully says it does not know.
    """

    # This is how a conversation between a user and the bot can go:
    user "Hello there!"
      express greeting
    bot express greeting
      "Hello! How can I assist you today?"
    user "What can you do for me?"
      ask about capabilities
    bot respond about capabilities
      "I am an AI assistant which helps answer questions based on a given knowledge base. For this interaction, I can answer question based on the job report published by US Bureau of Labor Statistics"
    user "Tell me a bit about the US Bureau of Labor Statistics."
      ask question about publisher
    bot response for question about publisher
      "The Bureau of Labor Statistics is the principal fact-finding agency for the Federal Government in the broad field of labor economics and statistics"
    user "thanks"
      express appreciation
    bot express appreciation and offer additional help
      "You're welcome. If you have any more questions or if there's anything else I can help you with, please don't hesitate to ask."

    # This is how the user talks:
    user "What was the movement on nonfarm payroll?"
      ask about headline numbers
    user "What's the number of part-time employed number?"
      ask about household survey data
    user "How much did the nonfarm payroll rise by?"
      ask about headline numbers
    user "What is this month's unemployment rate?"
      ask about headline numbers
    user "How many long term unemployment individuals were reported?"
      ask about household survey data

    # This is the current conversation between the user and the bot:
    user "Hello there!"
      express greeting
    bot express greeting
      "Hello! How can I assist you today?"
    user "What can you do for me?"
      ask about capabilities
    bot respond about capabilities
      "I am an AI assistant which helps answer questions based on a given knowledge base. For this interaction, I can answer question based on the job report published by US Bureau of Labor Statistics"
    user "how many unemployed people were there in March?"

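Notice the sections included in the prompt: the general instruction, the sample conversation, the most relevant examples of canonical forms, and the current conversation.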
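The four sections can be assembled with a few lines of Python. The sketch below is an assumption about how such a prompt could be put together, not the toolkit's own template code: `build_intent_prompt` and its parameters are hypothetical, while the section headers are copied from the example prompt in this guide.

```python
def build_intent_prompt(general_instruction, sample_conversation, examples, current_conversation):
    """Join the four prompt sections in the order used by the example prompt."""
    example_lines = "\n".join(f'user "{text}"\n  {intent}' for text, intent in examples)
    sections = [
        f'"""\n{general_instruction}\n"""',
        "# This is how a conversation between a user and the bot can go:\n" + sample_conversation,
        "# This is how the user talks:\n" + example_lines,
        "# This is the current conversation between the user and the bot:\n" + current_conversation,
    ]
    return "\n\n".join(sections)


prompt = build_intent_prompt(
    general_instruction="Below is a conversation between a helpful AI assistant and a user.",
    sample_conversation='user "Hello there!"\n  express greeting',
    examples=[("What is this month's unemployment rate?", "ask about headline numbers")],
    current_conversation='user "how many unemployed people were there in March?"',
)
print(prompt)
```

Ending the prompt with the latest user line leaves the LLM to complete the next line, which is the canonical form.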

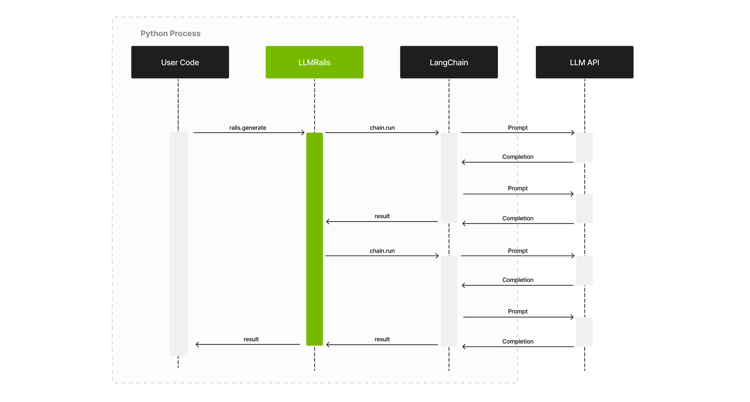

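Interaction with LLMs

The toolkit relies on LangChain for the interaction with LLMs. Below is a high-level sequence diagram showing the interaction between the user's code (the code that uses the guardrails), LLMRails, LangChain, and the LLM API.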

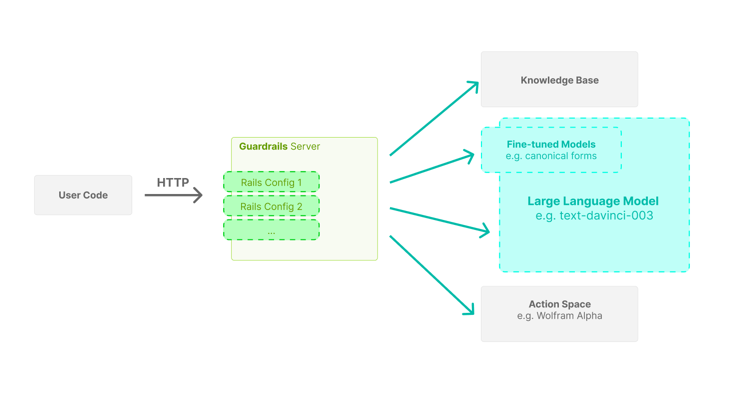

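Server Architecture

The toolkit provides a guardrails server with an interface similar to publicly available LLM APIs. With the server, integrating a guardrails configuration into your application can be as easy as replacing the initial LLM API URL with the Guardrails Server API URL.

The server is designed with high concurrency in mind, hence the async implementation using FastAPI.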
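To illustrate the idea of swapping the API URL, the sketch below builds (but does not send) a chat request aimed at a guardrails server. Everything specific here is an assumption to verify against the server documentation for your version: the base URL and port, the `/v1/chat/completions` path, the `config_id` field, and the `my_config` name; `build_chat_request` is a hypothetical helper.

```python
import json
import urllib.request


def build_chat_request(base_url, config_id, user_message):
    """Build a POST request for a chat-completions-style endpoint (not sent here)."""
    payload = {
        "config_id": config_id,  # assumed field selecting the guardrails configuration
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",  # assumed endpoint path
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Only the base URL changes when pointing the application at the guardrails server.
request = build_chat_request("http://localhost:8000", "my_config", "Hello there!")
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it, provided a server is actually listening at the assumed address.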